Data centers, the backbone of the digital economy, are under unprecedented pressure as their energy consumption and heat production soar. Traditionally, these facilities relied on air-based cooling systems — fans, raised floors, and chillers — to maintain optimal server temperatures. While these systems were adequate when server densities were moderate and workloads were predictable, today’s landscape is radically different.

Modern AI models, cloud computing, and real-time analytics demand enormous computational power, generating heat at levels that conventional cooling techniques struggle to dissipate. Moreover, climate change exacerbates the problem, reducing the effectiveness of “free cooling” methods that rely on cooler ambient air. Aging infrastructure further compounds the issue, as legacy data centers often lack the capability to efficiently manage modern, high-density servers. This convergence of factors has led to widespread failures of traditional cooling methods, forcing technology companies to explore radical alternatives.

AI and the Escalating Heat Problem

The explosion of artificial intelligence has fundamentally altered the thermal dynamics of data centers. Large-scale machine learning models, particularly generative AI systems, require intensive parallel processing that draws immense electrical power. Virtually all of that power is ultimately dissipated as heat, so racks packed with accelerators develop “hot spots” that air cooling alone cannot manage effectively.

Sasha Luccioni, Head of AI and Climate at Hugging Face, notes that as AI workloads grow, so too must the sophistication of cooling technologies. What was once sufficient, circulating cold air through a data hall, now falls short as AI workloads push power draw per rack to levels historically unseen. This escalating heat output is not just a technical concern; it is also a financial and environmental burden, because more energy is required to maintain stable operating conditions, which raises both costs and carbon emissions.

The Limitations of Conventional Cooling Systems

Air-based cooling systems face inherent limitations when confronting high-density, high-energy workloads. Raised floors and traditional chillers have been the standard, but their efficiency declines as rack density increases. The problem is compounded by global warming, which narrows the window for “free cooling” using outside air. Additionally, mechanical components such as fans, pumps, and compressors are prone to wear and failure, increasing operational risk. Older facilities were never designed to accommodate the enormous heat loads of modern AI workloads, leading to frequent system failures and inefficiencies. As a result, the industry is reaching a point where incremental improvements to existing systems are no longer sufficient, and radical, next-generation solutions are required to ensure reliability, sustainability, and cost-effectiveness.

Space-Based Cooling Innovations

Among the most futuristic approaches is Google’s Project Suncatcher, which explores placing data centers in space. The concept relies on solar-powered satellites in low Earth orbit (LEO). Because a vacuum offers no air or water to carry heat away, the servers’ heat would be radiated directly into the cold of deep space, potentially requiring far less cooling energy than terrestrial methods.

The project aims to launch prototype satellites by 2027, demonstrating the feasibility of space-based data centers. While technically challenging and expensive, this approach highlights the lengths to which companies are willing to go to manage thermal constraints. The potential benefits — minimal cooling energy requirements, consistent solar power, and reduced land usage — make it a compelling vision, even as hurdles like launch costs, radiation exposure, and maintenance in orbit remain significant.
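To make the idea of radiating heat into space concrete, the sketch below applies the Stefan-Boltzmann law to estimate how much radiator surface a given heat load would need. The emissivity, radiator temperature, and 100 kW load are illustrative assumptions, not figures from Google's project, and the model ignores sunlight and Earth's own infrared falling on the radiator.

```python
SIGMA = 5.670374419e-8  # Stefan-Boltzmann constant, W/(m^2 K^4)


def radiator_area_m2(heat_w, radiator_temp_k, emissivity=0.9, sink_temp_k=0.0):
    """Area of an idealized one-sided radiator that rejects heat_w watts.

    Net radiated flux is emissivity * sigma * (T_radiator^4 - T_sink^4).
    All default values are assumptions chosen for illustration only.
    """
    if radiator_temp_k <= sink_temp_k:
        raise ValueError("radiator must be hotter than its surroundings")
    flux_w_per_m2 = emissivity * SIGMA * (radiator_temp_k**4 - sink_temp_k**4)
    return heat_w / flux_w_per_m2


# Hypothetical example: 100 kW of server heat, radiator surface held at 330 K.
area = radiator_area_m2(heat_w=100_000, radiator_temp_k=330.0)
hotter_area = radiator_area_m2(heat_w=100_000, radiator_temp_k=360.0)
print(f"330 K radiator: {area:.0f} m^2, 360 K radiator: {hotter_area:.0f} m^2")
```

Because the flux grows with the fourth power of temperature, running the radiator hotter shrinks the required area quickly, which is one reason radiator sizing is a central design question for any orbital system.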

Harnessing Ocean Cooling

Before the space concept, Microsoft pioneered a different extreme: underwater data centers. These facilities involve submerging servers in pressure-resistant modules beneath the sea, utilizing cold ocean water as a natural heat sink. The experiment, conducted off the coast of Scotland, demonstrated that subsea cooling could reduce operational energy costs dramatically — estimates suggested up to 80% savings.

However, while thermal efficiency was proven, practical and financial challenges led to the eventual discontinuation of the project. The high costs of deployment, environmental concerns, and difficulties in servicing submerged modules highlighted the trade-offs inherent in such radical approaches. Despite these challenges, the project provided valuable insights into passive cooling methods and underscored the potential of leveraging natural resources for data center efficiency.

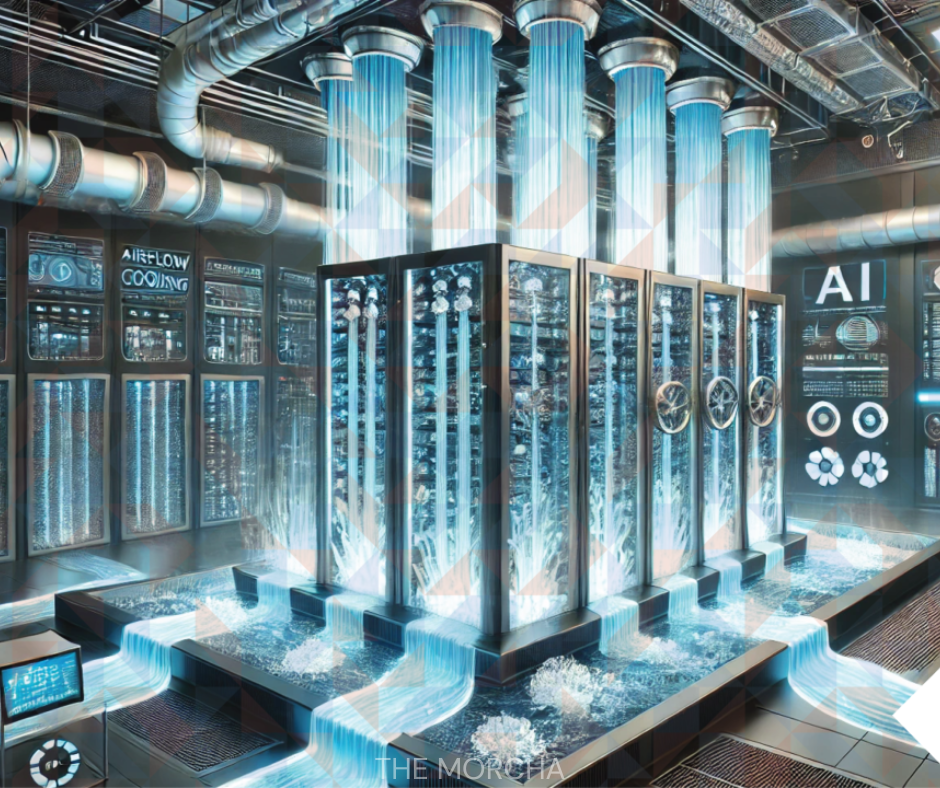

Direct Liquid Cooling Technologies

Liquid cooling represents a transformative shift in data center thermal management. Liquids have a far higher heat capacity per unit volume than air, so they can remove heat more efficiently from densely packed servers. Companies such as Iceotope have pioneered direct liquid cooling systems, where engineered dielectric fluids are sprayed directly onto chips and critical components. This method drastically reduces the energy required for temperature control, enables higher rack densities, and minimizes reliance on traditional air conditioning.

Direct liquid cooling also opens the door for more compact, modular server designs. However, engineering challenges remain, including ensuring pump reliability, preventing leaks, and maintaining fluid purity. Despite these hurdles, liquid cooling is increasingly being adopted in high-performance computing environments where conventional cooling fails.
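The heat-capacity advantage of liquids can be illustrated with the basic heat-transport relation Q = ρ · V̇ · c_p · ΔT. The sketch below compares the volumetric flow of air and of water needed to carry away the same heat. Water is used as a stand-in because its properties are well known; engineered dielectric fluids have different (generally lower) heat capacities, and the 50 kW rack and 10 K temperature rise are assumed values.

```python
def required_flow_m3_per_s(heat_w, density_kg_m3, specific_heat_j_kg_k, delta_t_k):
    """Volumetric coolant flow needed to absorb heat_w with a delta_t_k temperature rise."""
    if delta_t_k <= 0:
        raise ValueError("temperature rise must be positive")
    return heat_w / (density_kg_m3 * specific_heat_j_kg_k * delta_t_k)


# Approximate room-temperature properties (textbook values).
AIR = {"density_kg_m3": 1.2, "specific_heat_j_kg_k": 1005.0}
WATER = {"density_kg_m3": 997.0, "specific_heat_j_kg_k": 4180.0}

# Hypothetical rack: 50 kW of heat, coolant allowed to warm by 10 K.
air_flow = required_flow_m3_per_s(50_000, delta_t_k=10.0, **AIR)
water_flow = required_flow_m3_per_s(50_000, delta_t_k=10.0, **WATER)

print(f"air:   {air_flow:.2f} m^3/s")
print(f"water: {water_flow * 1000:.2f} L/s")
print(f"air needs roughly {air_flow / water_flow:,.0f}x the volume flow")
```

Under these assumptions the air stream must move thousands of times more volume than the water loop, which is why fans and ducting become impractical at high rack densities.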

Tackling Heat at the Source

Microfluidic cooling takes thermal management a step further by embedding microscopic channels directly within chip layers or heat spreaders. Coolant flows through these channels, extracting heat from processor cores almost instantaneously. This method allows for ultra-high heat removal efficiency and supports computational densities far beyond the capabilities of air-based or standard liquid cooling systems.

While still in early research and pilot stages, microfluidic cooling has the potential to revolutionize server architecture. By integrating thermal management at the silicon level, chips can self-regulate heat, minimizing hotspots and enhancing performance. It represents a future where cooling is intrinsic to computation, blurring the line between thermal management and processor design.

Reimagining Waste Heat as a Resource

Historically, heat generated by data centers has been treated as a waste product, vented into the atmosphere. However, innovative companies are now exploring ways to repurpose this energy. In the United States, a hotel chain has initiated a project to capture exhaust heat from nearby data centers and redirect it for practical uses such as heating guest rooms, powering laundry operations, and warming swimming pools. This approach not only reduces energy consumption from conventional sources but also transforms data centers into active participants in local energy networks. By converting a liability into a resource, companies can enhance sustainability profiles while providing tangible economic benefits.

District Heating and Urban Integration

In Nordic countries like Sweden and Finland, waste heat from data centers is integrated into municipal heating networks, providing a stable and predictable source of thermal energy for nearby homes and businesses. This approach exemplifies how urban infrastructure can be reimagined around data centers as energy providers. Heat recovery systems, pipelines, and heat pumps allow operators to feed low-grade heat into district networks efficiently. While there are challenges, including seasonal demand fluctuations and initial capital investments, the environmental and economic benefits are significant. By positioning data centers as nodes in energy ecosystems, cities can reduce reliance on fossil fuels and enhance sustainability.
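As a rough illustration of how a heat pump lifts low-grade server heat to district-heating temperatures, the sketch below uses the standard energy balance (heat delivered = waste heat absorbed + electricity used) and the definition of the coefficient of performance (COP). The COP of 4, the 1 MW heat stream, and the 30 °C and 80 °C temperatures are assumptions for illustration, not data from any Nordic installation.

```python
def heat_pump_output(waste_heat_kw, cop):
    """Return (delivered_heat_kw, electricity_kw) for a heat pump absorbing waste_heat_kw.

    COP is defined as delivered heat divided by electrical input, and
    delivered heat = absorbed waste heat + electrical input.
    """
    if cop <= 1:
        raise ValueError("a heating COP must be greater than 1")
    electricity_kw = waste_heat_kw / (cop - 1)
    return waste_heat_kw + electricity_kw, electricity_kw


def carnot_cop_heating(source_c, supply_c):
    """Theoretical upper bound on heating COP between two temperatures in Celsius."""
    source_k, supply_k = source_c + 273.15, supply_c + 273.15
    if supply_k <= source_k:
        raise ValueError("supply temperature must exceed source temperature")
    return supply_k / (supply_k - source_k)


# Hypothetical case: 1 MW of 30 C waste heat upgraded for an 80 C network.
delivered, electricity = heat_pump_output(waste_heat_kw=1000.0, cop=4.0)
limit = carnot_cop_heating(source_c=30.0, supply_c=80.0)
print(f"delivered {delivered:.0f} kW using {electricity:.0f} kW of electricity")
print(f"Carnot limit on COP: {limit:.1f}")
```

The gap between the assumed COP and the Carnot limit is a reminder that real machines fall well short of the ideal, and that a smaller temperature lift improves the economics.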

AI-Optimized Cooling Systems

Ironically, artificial intelligence — one of the primary contributors to rising heat levels — is also part of the solution. AI-driven thermal management systems analyze real-time server workloads, predicting heat patterns and adjusting cooling parameters dynamically. Machine learning models optimize fan speeds, coolant flow, and thermal distribution to minimize energy consumption while maintaining safe operating temperatures.

This self-regulating system not only improves efficiency but also reduces the risk of overheating and equipment failure. As AI workloads grow more complex, these intelligent cooling systems are likely to become standard practice in modern data centers, representing a convergence of technology and sustainability.

Economic Implications of Advanced Cooling

The adoption of new cooling technologies carries significant economic implications. While systems like liquid cooling, microfluidics, and heat reuse involve high upfront investment, they offer substantial operational savings over time. Reduced energy consumption lowers utility costs, while efficient heat recovery can offset heating expenses for nearby facilities. Furthermore, compliance with evolving environmental regulations may necessitate such investments, positioning early adopters as industry leaders in sustainability. Balancing capital expenditure against long-term efficiency gains and regulatory incentives is a central consideration for technology companies designing next-generation data centers.
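The trade-off between capital expenditure and operating savings is often summarized as a simple payback period. The sketch below shows that arithmetic; every figure in it is hypothetical, and it ignores discounting, maintenance, and changes in energy prices.

```python
def simple_payback_years(extra_capex, annual_kwh_saved, price_per_kwh,
                         annual_heat_revenue=0.0):
    """Years needed for yearly savings to repay the additional upfront cost."""
    annual_benefit = annual_kwh_saved * price_per_kwh + annual_heat_revenue
    if annual_benefit <= 0:
        raise ValueError("annual benefit must be positive")
    return extra_capex / annual_benefit


# Hypothetical retrofit: 2,000,000 extra capex, 4 GWh saved per year at 0.10 per kWh.
energy_only = simple_payback_years(2_000_000, 4_000_000, 0.10)
with_heat_sales = simple_payback_years(2_000_000, 4_000_000, 0.10,
                                       annual_heat_revenue=100_000)
print(f"energy savings only: {energy_only:.1f} years")
print(f"with heat sales:     {with_heat_sales:.1f} years")
```

Adding revenue from recovered heat shortens the payback, which is how heat reuse can tip an otherwise marginal investment.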

Environmental Benefits and Carbon Footprint Reduction

Beyond economics, advanced cooling technologies have a profound impact on environmental sustainability. Data centers account for an estimated 1–3% of global electricity consumption, with cooling systems comprising a significant portion of this usage. By adopting liquid cooling, microfluidics, and heat reuse strategies, operators can drastically reduce energy demand and associated greenhouse gas emissions. Integrating renewable energy sources, optimizing workload distribution, and using AI-driven thermal controls further amplify these benefits. As governments and regulatory bodies increasingly prioritize sustainability, environmentally conscious data center design is becoming both a competitive advantage and a societal necessity.

Hybrid and Adaptive Systems

The next generation of data centers is likely to feature hybrid cooling architectures, combining air, liquid, and microfluidic systems to manage diverse workloads efficiently. Adaptive cooling systems will use real-time analytics to dynamically allocate resources, adjusting cooling intensity based on server load, ambient temperature, and operational priorities. Distributed and modular designs will allow edge facilities to implement tailored cooling solutions, while mega-scale central data centers adopt innovative strategies like heat recycling or liquid immersion. These hybrid systems offer flexibility, scalability, and resilience, making them critical to meeting future computational demands sustainably.
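One way to picture a hybrid architecture is as a dispatch rule that assigns each rack the simplest cooling method able to handle its load. The thresholds and rack names below are purely hypothetical placeholders, not industry limits; an operator would derive them from its own hardware and facility data.

```python
def choose_cooling(rack_kw, air_limit_kw=20.0, liquid_limit_kw=100.0):
    """Pick a cooling tier for a rack; both limits are illustrative assumptions."""
    if rack_kw < 0:
        raise ValueError("rack load cannot be negative")
    if rack_kw <= air_limit_kw:
        return "air"
    if rack_kw <= liquid_limit_kw:
        return "liquid"
    return "microfluidic"


# Hypothetical fleet mixing a light edge rack with dense accelerator racks.
rack_loads_kw = {"edge-1": 8, "gpu-7": 60, "gpu-9": 140}
plan = {name: choose_cooling(kw) for name, kw in rack_loads_kw.items()}
print(plan)
```

An adaptive system would re-run a rule like this as loads and ambient conditions change, rather than fixing the assignment at design time.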

Data Centers as Energy Nodes

A broader trend is the transformation of data centers from mere consumers of energy to active participants in energy networks. By capturing and distributing waste heat, integrating with smart grids, and participating in demand-response programs, data centers can become energy nodes within urban and regional ecosystems. This reconceptualization aligns with global sustainability goals, reduces operational risk, and creates new revenue opportunities. In essence, data centers are evolving from isolated technical facilities into integral components of modern energy infrastructure.

Understanding Traditional Cooling Limitations

Historically, raised floors, air conditioning, and chillers sufficed to manage data center temperatures. But with modern servers consuming significantly more power per rack, these systems are stretched beyond their limits. Mechanical failures, inefficiency at high densities, and environmental factors like rising ambient temperatures make conventional cooling increasingly unreliable. Even incremental improvements to existing air-based systems fail to address the magnitude of heat produced by today’s AI workloads, forcing companies to rethink cooling from the ground up.

The Role of AI Workloads in Heat Generation

Artificial intelligence, especially large-scale models, has changed the thermal landscape of data centers. Intensive computations require massive parallel processing, generating localized hotspots that conventional cooling cannot manage effectively. AI workloads are not only increasing power consumption but also creating irregular heat patterns that require dynamic, precise cooling solutions. Without adaptation, these patterns can lead to equipment degradation, higher operational costs, and potential system failures.

Climate Change and Its Impact on Cooling

Global warming has reduced the availability of “free cooling” through outside air, particularly in regions experiencing higher average temperatures. As outdoor air becomes less viable for cooling, mechanical chillers must operate more frequently, raising electricity consumption and costs. The combination of environmental factors and higher energy densities creates urgent pressure for innovative cooling technologies that do not rely solely on ambient air temperatures.
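The shrinking free-cooling window can be illustrated by counting the hours in which outdoor air is cool enough to use directly. The 18 °C cutoff, the sample temperatures, and the uniform 2 °C warming below are assumptions for illustration; real limits depend on the facility's design and on humidity as well as temperature.

```python
def free_cooling_fraction(hourly_temps_c, max_outdoor_c=18.0):
    """Share of hours in which outdoor air is at or below the usable limit."""
    if not hourly_temps_c:
        raise ValueError("need at least one temperature reading")
    usable_hours = sum(1 for temp in hourly_temps_c if temp <= max_outdoor_c)
    return usable_hours / len(hourly_temps_c)


# Hypothetical hourly readings, then the same readings shifted 2 C warmer.
temps_c = [12, 14, 16, 17, 18, 19, 21, 23]
baseline = free_cooling_fraction(temps_c)
warmer = free_cooling_fraction([temp + 2 for temp in temps_c])
print(f"usable hours: {baseline:.1%} today, {warmer:.1%} after 2 C of warming")
```

Every hour that drops out of the usable set is an hour in which mechanical chillers must run instead.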

Space-Based Data Centers

Google’s Project Suncatcher explores placing data centers on satellites in low Earth orbit (LEO). These satellites would radiate heat directly into the cold vacuum of space, while solar energy provides a constant power source. Though technically challenging and expensive, space-based data centers demonstrate how radical thinking can address cooling limitations. Potential benefits include minimal energy use for cooling, reduced land requirements, and continuous solar power. Challenges include launch costs, radiation protection, and maintenance in orbit.

Microsoft’s Ocean Experiment

Microsoft experimented with underwater data centers by submerging servers off the coast of Scotland. The cold ocean acted as a natural heat sink, reducing cooling costs by up to 80%. Despite success in thermal management, deployment and maintenance costs, along with environmental concerns, led to discontinuation. This project highlighted the potential for passive cooling methods and the advantages of leveraging natural resources for efficient temperature control.

Direct Liquid Cooling

Direct liquid cooling sprays critical server components with, or immerses them in, engineered dielectric fluids that absorb heat much more efficiently than air. Companies like Iceotope are leading this field, enabling higher server density, reducing energy use, and minimizing reliance on traditional chillers. Engineering challenges include pump reliability, fluid purity, and system maintenance. This method is particularly valuable in high-performance computing environments where heat densities are extreme.

Advanced Thermal Control

Microfluidic cooling embeds microscopic channels within chip layers, allowing coolant to flow directly over hotspots. This technology supports extremely high power densities while maintaining precise temperature control. Though primarily in research and pilot phases, microfluidic cooling could revolutionize how processors self-manage heat, creating highly integrated systems where cooling and computation occur simultaneously.

Hybrid Cooling Systems

Future data centers may combine air, liquid, and microfluidic cooling in hybrid architectures. These adaptive systems can dynamically distribute cooling based on workload, environmental conditions, and energy efficiency goals. Hybrid designs offer flexibility, scalability, and resilience, supporting both edge and mega-scale data centers while maximizing energy efficiency and reducing operational risk.

AI-Optimized Thermal Management

AI-driven cooling systems monitor server workloads in real time, predicting heat patterns and adjusting cooling parameters dynamically. Machine learning models optimize fan speeds, coolant flows, and thermal distribution, minimizing energy consumption while ensuring stable operation. This integration of AI into cooling itself exemplifies a feedback loop in which AI workloads generate heat and AI manages that heat, creating self-regulating, energy-efficient environments.

Repurposing Waste Heat

Data centers produce enormous amounts of heat, traditionally treated as waste. Innovative initiatives now redirect this heat for practical applications such as heating buildings, swimming pools, and industrial processes. This approach transforms data centers from energy consumers into active contributors to local energy ecosystems, improving sustainability while creating economic value.

District Heating and Urban Integration

In countries like Sweden and Finland, data center heat is integrated into municipal heating networks, providing thermal energy to homes and businesses. Heat recovery pipelines and heat pumps allow low-grade waste heat to meet urban heating needs efficiently. While challenges exist, including seasonal demand fluctuations and infrastructure costs, the benefits for energy efficiency and sustainability are substantial.

Economic Impacts of Advanced Cooling

Advanced cooling technologies require significant upfront investments but offer long-term savings. Reduced electricity consumption, efficient heat reuse, and compliance with environmental regulations justify capital expenditure. Organizations that adopt innovative cooling early can gain a competitive advantage by reducing operational costs, carbon footprints, and exposure to regulatory pressures.

Environmental Benefits and Carbon Reduction

Cooling accounts for a major portion of data center energy usage, contributing to global electricity demand and greenhouse gas emissions. By adopting liquid cooling, microfluidics, and heat reuse systems, operators can dramatically reduce energy consumption and environmental impact. Renewable energy integration and AI-based optimization amplify these benefits, aligning data center operations with global sustainability goals.

Toward a Cooler, Smarter Future

The global demand for computational power is unlikely to slow, and neither is the heat generated by data centers. Traditional cooling methods, once sufficient, are no longer adequate. Innovations ranging from space-based satellites to subsea servers, direct liquid cooling, microfluidics, and waste heat reuse illustrate the breadth of approaches under consideration. Alongside these technical innovations, AI-driven optimization and hybrid cooling systems offer practical solutions for the present and future.

The evolving vision sees data centers not only as computing engines but as intelligent, energy-aware infrastructure capable of contributing to environmental sustainability. As the industry embraces these transformations, the future promises a cooler, smarter, and more resource-efficient era for global data infrastructure.